How a Cornell University Virtual Reality research challenge was solved with Krikey AI Animation

How Cornell University's Virtual Reality lab used Krikey AI Animation for research on immersive experiences.

Summary

Krikey AI enabled two Cornell University research teams, the Virtual Embodiment Lab (VEL) and the Laboratory for Intelligent Systems and Controls (LISC), to collect underwater motion data for a research project on robotic assistants for scuba divers.

These data were to be integrated into a virtual environment described in the paper "UnRealTHASC - A Cyber-Physical XR Testbed for Underwater Real-Time Human Autonomous Systems Collaboration" by Sushrut Surve, Jia Guo, Jovan Menezes, Connor Tate, Yiting Jin, Justin Walker and Silvia Ferrari, to be presented at the IEEE International Conference on Robot and Human Interactive Communication (IEEE RO-MAN 2024).

- Description: Cornell University Professors Silvia Ferrari and Andrea Stevenson Won and their teams were seeking to build a hydrodynamic motion model to help with the development of robot buddies for scuba divers. They needed a way to track underwater motion data of scuba divers and extract it accurately for analysis. PhD student Jiahao Liu used Krikey AI as a tool.

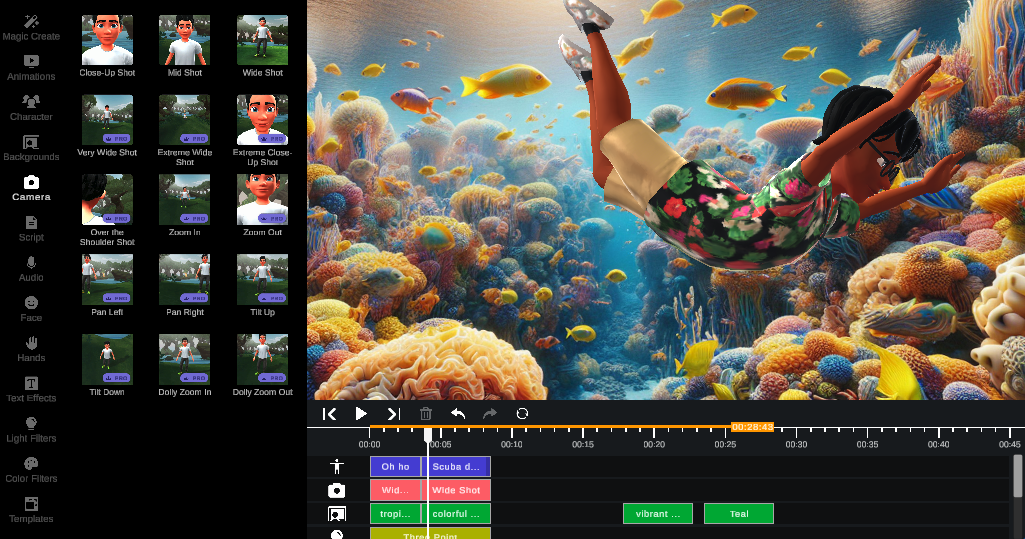

- Krikey AI features used: AI Video to Animation process, Animations, 3D Video editor, Camera Angles, FBX Export

"It's not a question of can we do it better and faster, it's can we do it at all?" ~ Professor Andrea Won, Cornell University

Challenge

- Reconstructing underwater motion data from motion capture datasets

- Using reconstructed data from videos in combination with Xsens data to improve motion data accuracy from motion capture datasets

- Finding or creating new underwater datasets that aligned with the research specifications

Solution

- Krikey AI became an additional reference point for calculating motion through its AI Video to Animation tool

- Krikey AI enabled the research team to widen their data repository. The team can now potentially use existing video datasets of scuba divers, eliminating the time and cost of creating new datasets

- Krikey AI allowed export of the motion files for the team to use and further analyze seamlessly in other software tools

For the Cornell University Virtual Reality Lab, Krikey AI's powerful AI Video to Animation tool became instrumental in overcoming significant research hurdles. Krikey’s cloud infrastructure provided the high-performance compute and scalable storage necessary to process video data and export precise motion files. This Amazon Web Services (AWS)-backed foundation enabled faster setup and lower costs, accelerating Cornell’s innovative work.

ROI (Return on Investment)

After evaluating alternative software tools, Cornell University used the Krikey AI Animation tools to conduct research for this project.

Time and Cost for Virtual Animation

- 7x faster to set up Krikey AI than alternative virtual animation software tools

- 64% lower cost than alternative motion capture tools (example: a low-cost, decent motion capture system can cost between $500 and $1000, not including the time cost of setup; Krikey AI Animation is $30 / month)

- Priceless: The ability to expand the video dataset beyond motion capture data
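
One way to arrive at the 64% figure is sketched below. It assumes the comparison is between 12 months of Krikey AI at $30 / month and the upper end of the $500 - $1000 range quoted above. The 12-month period and the choice of the upper bound are assumptions made for illustration, not details stated by the research team.

```python
# Hedged sketch: reproduce the "64% lower cost" figure under stated assumptions.
MONTHLY_SUBSCRIPTION_USD = 30   # from the text: Krikey AI Animation is $30 / month
MOCAP_SYSTEM_USD = 1000         # assumption: upper end of the quoted $500 - $1000 range
MONTHS = 12                     # assumption: one year of use


def percent_savings(alternative_cost: float, subscription_cost: float) -> float:
    """Return how much cheaper the subscription is, as a percentage of the alternative."""
    return (alternative_cost - subscription_cost) / alternative_cost * 100


annual_subscription = MONTHLY_SUBSCRIPTION_USD * MONTHS
savings = percent_savings(MOCAP_SYSTEM_USD, annual_subscription)
print(f"{savings:.0f}% lower cost")
```

Under different assumptions (a shorter project, or the $500 lower bound) the percentage changes, so the figure is best read as an order-of-magnitude comparison.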

Accuracy and Efficiency of Virtual Animation

- Krikey AI allowed Cornell University researchers to more accurately identify the position, rotation and orientation metrics of divers’ limbs for underwater motion

- Instead of painting markers onto a diver and attempting to calculate distance traveled underwater, with Krikey AI Animation the research team now had more accurate motion data from AI Video to Animation conversion

- A future aim is to use existing archival video of scuba divers underwater and see the virtual animation motion data in minutes

About the Customer

Cornell University's Virtual Embodiment (Virtual Reality) lab is led by Professor Andrea Won. Her lab's research is focused on immersive media and how people perceive and experience it, including applications around nonverbal behavior, communication and collaboration. She completed her PhD at Stanford University in the Department of Communication, conducting research in the Virtual Human Interaction lab. Professor Won's PhD student at Cornell University, Jiahao Liu, is leading the research project to build a hydrodynamic model for robot assistants to scuba divers.

This project stems from a collaboration between the Laboratory for Intelligent Systems and Controls (LISC), led by Prof. Silvia Ferrari, and the Virtual Embodiment Lab (VEL), led by Prof. Andrea Won, on human-agent teaming in underwater settings. To improve the ability of robot guides to work with divers, Jia Guo, post-doc at LISC, and Sushrut Surve, PhD student also of LISC, are working with Jiahao Liu, PhD student at VEL, and other team members to build a hydrodynamic model of scuba divers.

About Krikey AI

Krikey AI Animation tools empower anyone to animate a 3D character in minutes. The character animations can be used in marketing, tutorials, games, films, social media, lesson plans and more. In addition to video to animation and text to animation AI models, Krikey offers a 3D editor that creators can use to add lip synced dialogue, change backgrounds, facial expressions, hand gestures, camera angles and more to their animated videos. Krikey's AI tools are available online at www.krikey.ai today, as well as on Canva Apps, Adobe Express and the AWS Marketplace!

The Challenge

The research team knew that human-robot interactions were challenging. They set out to build a diving simulator to capture diver behavior and simulate different underwater challenges. They wanted to understand how people prefer robots to behave, and they wanted robots to understand human movement underwater.

Motion capture research underwater

At first, the Cornell University VR research team identified two ways to capture movements with virtual animation tools. First, a person in the simulation could wear a motion capture suit and swim through a virtual environment. Second, a person could wear the motion capture suit under a drysuit and swim through actual water, though this proved to be very technically and logistically challenging.

Neither one of these methods actually captured the motion metric of translation - how people move through the water. For this, they needed to build a hydrodynamic model. Given a certain set of movements and water conditions, how far would a diver be able to swim?

Current research process for motion data

Before using Krikey AI to measure body movement synchrony, the Cornell University team used motion capture data and 3D reconstruction software tools. However, once they tried Krikey AI, they found it to be more accurate and convenient for their research methodology. Other software tools struggled to output animations or motion data quickly, and any occlusion (parts of the body covered by objects) made it difficult to capture accurate movement. It took the Cornell University research team 7+ days to set up alternative software tools. With Krikey AI, they were able to try it out in less than an hour and immediately see results. They began by filming a video of themselves waving at the camera and converting it to animation. Once they saw the speed and accuracy of the AI Video to Animation tool, they began testing videos from their dataset.

Developing a hydrodynamic model of diver movement

The goal of the Cornell University research team was to train a robot assistant that can help scuba divers. To do this, they needed to build a hydrodynamic model of diver movement so they could better train the robot to move alongside the diver. They had to integrate three streams of data: video, motion capture and reconstructed translation motion data. At first, they were concerned because they had only a limited dataset of underwater motion capture data, and creating new datasets would take considerable time and money. Then they found Krikey AI Animation tools.

The Solution

Here is how the Cornell University Research team solved their research challenge using Krikey AI Animation tools.

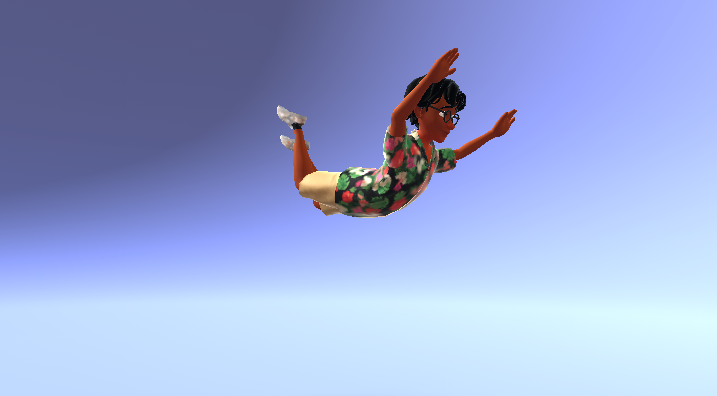

How Krikey AI replaced motion capture methodology

With Krikey AI, the Cornell University VR lab research team could take a single video that captures a diver's movement and use AI Video to Animation to get the movement data in 3D space with an avatar. They could then compare the motion capture data from their original dataset with the Krikey AI video reconstruction data and check whether the two were aligned. This helped them ensure that more accurate video data was pulled into their hydrodynamic model. In the future, they hope to generate simulated data for many different divers, making the training data for a robot "diving buddy" more diverse, accurate and robust.
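
As a rough illustration of what checking alignment between two recordings can look like, the sketch below computes the average distance per joint between a motion capture recording and a video reconstruction. It is not the research team's method. The data layout, joint name and toy values are hypothetical, and the sketch assumes both recordings are already time-synchronized and expressed in the same coordinate frame and units.

```python
import math

# Hypothetical layout: joint name -> list of (x, y, z) positions, one per frame.
Trajectory = dict[str, list[tuple[float, float, float]]]


def mean_joint_error(mocap: Trajectory, reconstructed: Trajectory) -> dict[str, float]:
    """Average Euclidean distance per joint between two recordings."""
    errors = {}
    for joint in mocap.keys() & reconstructed.keys():  # only joints present in both
        # zip stops at the shorter clip, so extra trailing frames are ignored
        pairs = list(zip(mocap[joint], reconstructed[joint]))
        if not pairs:
            continue
        errors[joint] = sum(math.dist(a, b) for a, b in pairs) / len(pairs)
    return errors


# Toy values, made up for illustration only.
mocap = {"left_wrist": [(0.0, 1.0, 0.0), (0.1, 1.0, 0.0)]}
video = {"left_wrist": [(0.0, 1.0, 0.0), (0.1, 1.0, 0.3)]}
print(mean_joint_error(mocap, video))
```

A small error on every joint suggests the two sources agree. A large error on a single joint can point to occlusion or a tracking problem in one of them.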

Saving time and improving accuracy

With the dataset expanded and Krikey AI providing an easier methodology to calculate motion, the Cornell University research team could now calculate how a diver would move through the water, including speed, depth, force of movement in water, distance and more. Krikey AI was a key software tool that brought together the different streams of data and calculations required. It improved speed and accuracy and shortened the time needed to conduct the research.

Efficiency with interoperability

The Cornell University Virtual Reality research team had to integrate data from videos and motion capture suit trackers and combine them in Unity. Krikey AI helped them solve two problems. First, Krikey AI helped align the motion capture data with video data and became a source of truth reference for calculating motion. Second, in the future Krikey AI can enable the research team to pull movement data from archived videos of scuba divers, removing the need to create new datasets. With the ability to easily export FBX files to Unity, the VR research team was able to use Krikey AI Animation to identify key motion metrics in their research.

Cornell University researcher Jiahao Liu created a script to connect the rigs from motion capture and Krikey AI FBX files. He was quickly able to identify and accurately measure the motion metrics of position, rotation and orientation, which would be a helpful source for the hydrodynamic model.
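
The script itself is not shown in this case study. As a minimal sketch of the general idea, connecting two rigs usually starts with a table that maps joint names in one skeleton to the matching joints in the other. Every joint name and value below is hypothetical; real motion capture suits and FBX exports use their own naming.

```python
# Hypothetical joint names, chosen only for illustration.
MOCAP_TO_FBX = {
    "LeftUpperArm": "LeftArm",
    "LeftForeArm": "LeftForeArm",
    "RightUpperLeg": "RightUpLeg",
}


def pair_joints(mocap_frame: dict, fbx_frame: dict, name_map: dict = MOCAP_TO_FBX) -> dict:
    """Pair per-joint values from two rigs under the motion capture joint name."""
    paired = {}
    for mocap_name, fbx_name in name_map.items():
        # Skip joints that are missing from either rig instead of failing.
        if mocap_name in mocap_frame and fbx_name in fbx_frame:
            paired[mocap_name] = (mocap_frame[mocap_name], fbx_frame[fbx_name])
    return paired


# Toy rotation values (degrees), made up for illustration only.
mocap_frame = {"LeftUpperArm": (0.0, 10.0, 0.0), "Head": (0.0, 0.0, 0.0)}
fbx_frame = {"LeftArm": (0.0, 12.0, 0.0)}
print(pair_joints(mocap_frame, fbx_frame))
```

Once joints are paired this way, position, rotation and orientation values from the two sources can be compared joint by joint.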

How Krikey AI was used in the Cornell Virtual Reality Research Lab

Cornell University's Virtual Reality (VR) Lab research team used the Krikey AI Animation tool in a variety of ways to transform their research methodology.

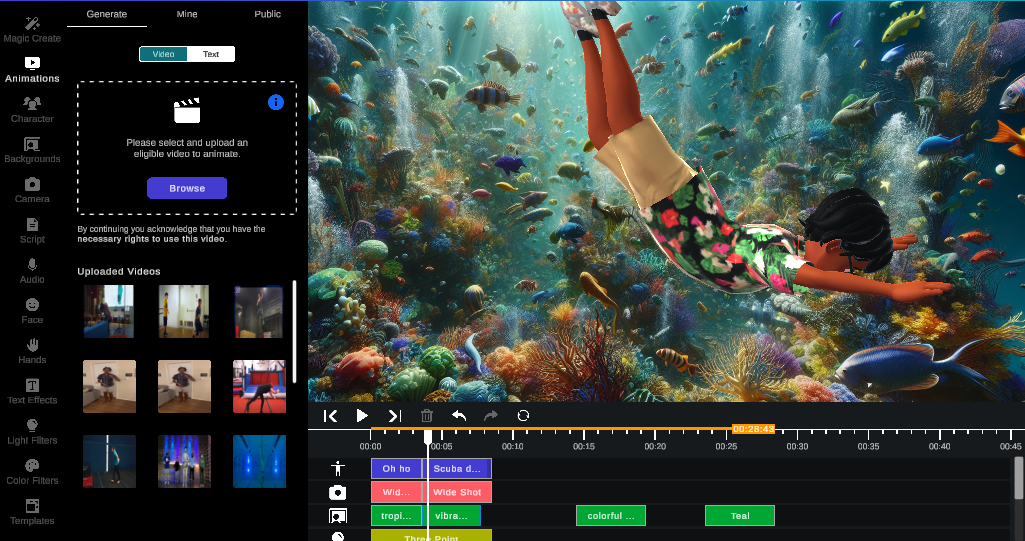

AI Video to Animation

Krikey's AI Video to Animation tool enabled the Cornell research team to use any video and convert it to animation rather than only relying on specific motion capture datasets. This expanded their data repository and helped strengthen the output of their hydrodynamic motion model.

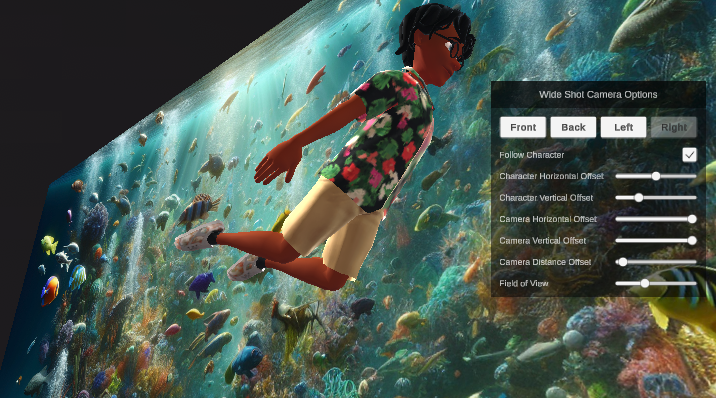

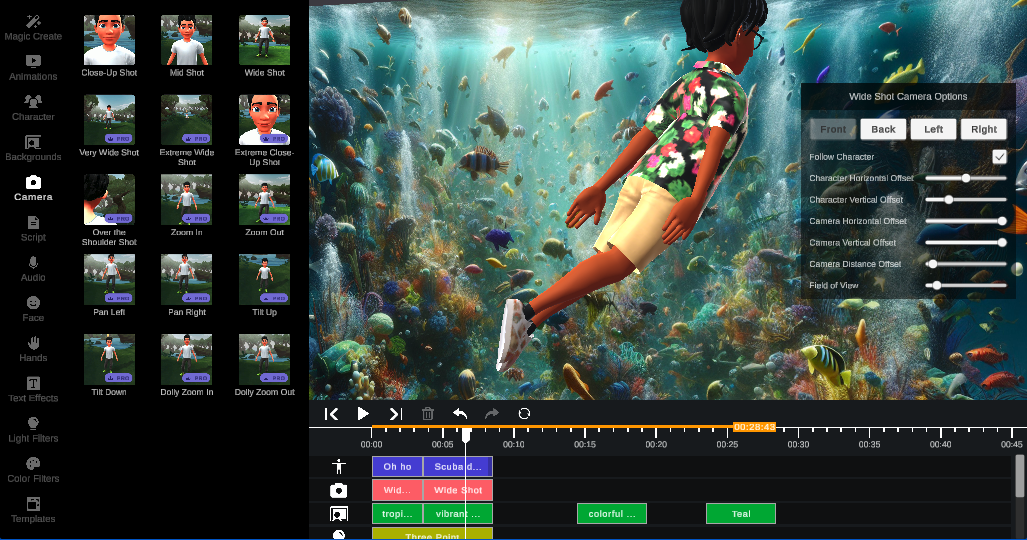

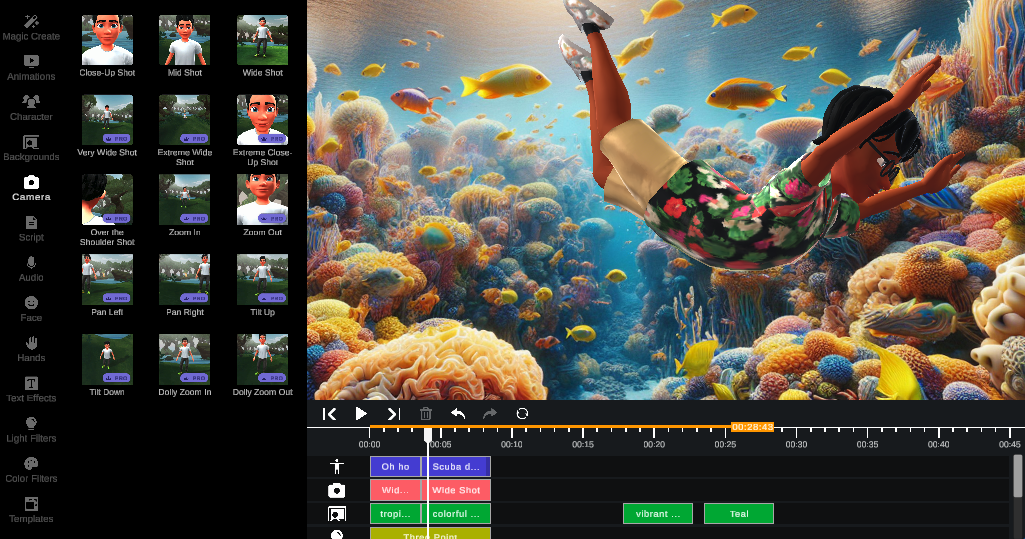

Camera Angles

Krikey AI's video editor enables customers to add camera angles so they can see any animation from a number of different angles and visualize it immediately in a viewer. This helped the Cornell research team ensure that their video to animation files were usable and visually inspected from multiple angles before being exported and converted to numbers in a sheet.

FBX Export

Krikey AI's FBX file export helped the Cornell research team take their animations into the Unity software tool, where they could further manipulate them, connect the data to their previous motion capture dataset (to strengthen the accuracy of that dataset) and extract key motion metrics. Krikey AI's animation outputs were easily interoperable with these additional software tools, making it easy and seamless for the Cornell University team to use Krikey AI in their research workflow.

"Krikey AI became a source of truth reference for calculating motion in our Cornell University research lab. AI Video to animation expanded our dataset and allowed us to work with existing video rather than spending time creating new datasets. The Krikey AI Animation tool has saved our team a lot of time and money in the process of doing this research project." ~ Professor Andrea Won, Cornell University